Examples

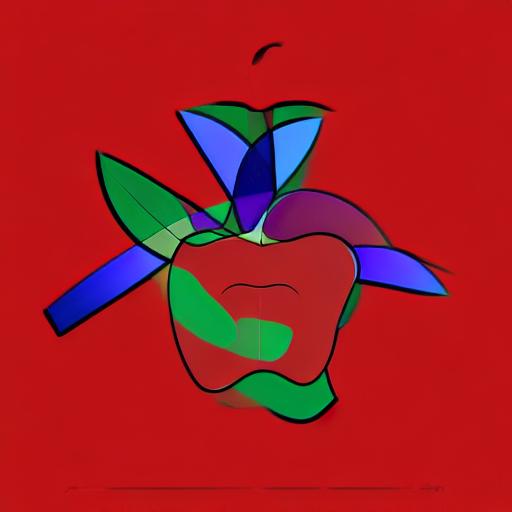

It can be difficult to see how good generated code is, so this example uses images instead, because problems in an image are easier to spot. The images were produced with the image generation tool Stable Diffusion, which was given three pieces of information:

- A random seed

- A text prompt

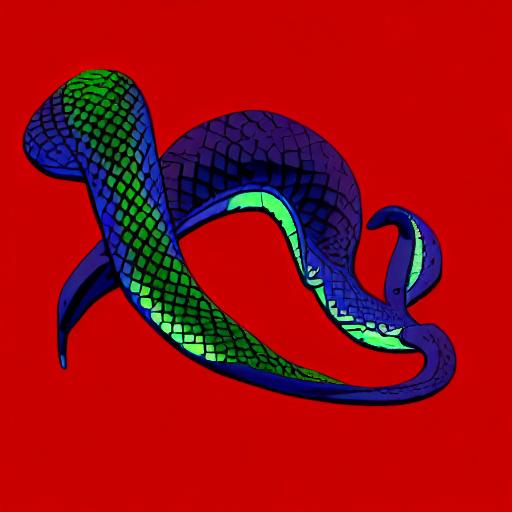

- A guide image

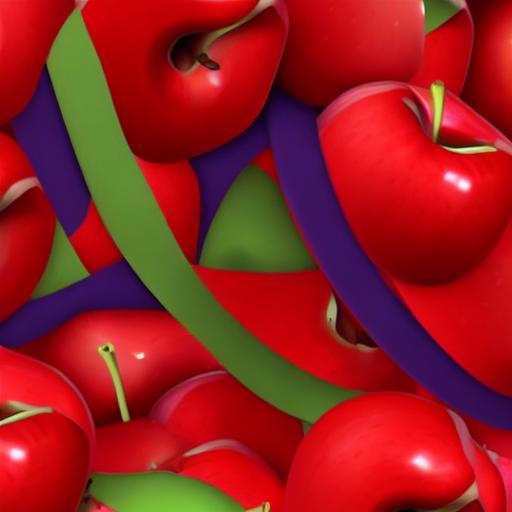

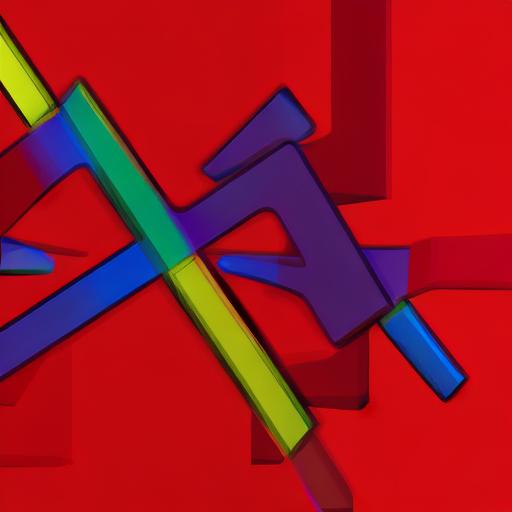

In each of the following sets of three images, note how Stable Diffusion has used the prompt and guide image to draw on its training data. If the prompt, seed and guide image are the same each time, the same image will be generated: the image generation is deterministic.

If this were code generation instead, the prompt would describe the problem and the generated code would be based on the relevant training data. The output would therefore be built from code sequences associated with the information the LLM has extracted from the prompt text. The output images here should help show how text generated by tools such as ChatGPT and Bard can appear to be a reasonable answer to the prompt yet still have issues.
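The determinism point can be illustrated with a toy sketch. This is not Stable Diffusion: `toy_generate` is a hypothetical stand-in that assumes a generator whose only source of randomness is a seeded pseudo-random number generator, combined with a fixed mix of the prompt and guide image. Under that assumption, identical inputs always give identical output, and changing only the seed changes the starting noise.

```python
import hashlib
import random


def toy_generate(prompt: str, seed: int, guide: bytes, size: int = 8) -> list[int]:
    """Hypothetical stand-in for an image generator.

    Returns `size` "pixel" values in the range 0-255.
    """
    # Fold the prompt and guide image into fixed conditioning bytes.
    digest = hashlib.sha256(prompt.encode("utf-8") + guide).digest()
    # The seed fixes the starting noise, much as a diffusion model's seed does.
    rng = random.Random(seed)
    noise = [rng.randrange(256) for _ in range(size)]
    # Combine noise and conditioning with a purely deterministic rule (XOR keeps values in 0-255).
    return [n ^ digest[i % len(digest)] for i, n in enumerate(noise)]


first = toy_generate("a red bicycle", seed=42, guide=b"guide sketch")
second = toy_generate("a red bicycle", seed=42, guide=b"guide sketch")
print(first == second)  # True: same prompt, seed and guide give the same output

other_seed = toy_generate("a red bicycle", seed=43, guide=b"guide sketch")
print(other_seed)  # different seed, different starting noise
```

A real model does far more work between the noise and the final image, but the reproducibility argument is the same: nothing in the pipeline varies except the inputs.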